LRU Cache

Design a data structure that follows the constraints of a Least Recently Used (LRU) cache.

What is LRU Cache

LRU It is an algorithm that is used to manage the memory of a computer system by identifying and discarding the least recently used items in order to free up space for new items.

LRU cache is based on Simple recency-based policies, meaning at the time of caching new item if the cache is out of capacity, it Identifies and Discards that least recently used items first to fit in new item in the cache memory.

The idea behind the LRU algorithm is that items that have been used more recently are more likely to be used again in the near future, so they should be kept in the cache. On the other hand, items that have not been used for a long time are less likely to be used again, and therefore can be removed from the cache to make room for new items.

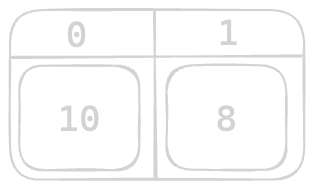

Suppose the Cache Capacity is 2, meaning the cache can contain only 2 items, not more than that. Like this picture bellow.

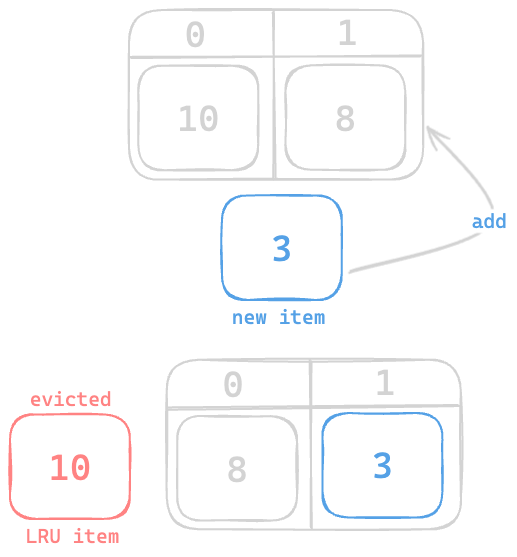

Here 10 is the Least Recently Used item and if a new item suppose 3 needs to be cached the LRU item 10 will be removed and 3 will be inserted like this bellow picture.

Problem Statement

We need to Implement the LRUCache class:

LRUCache(int capacity)Initialize the LRU cache with positive sizecapacity.int get(int key)Return the value of thekeyif the key exists, otherwise return-1.void put(int key, int value)Update the value of thekeyif thekeyexists. Otherwise, add thekey-valuepair to the cache. If the number of keys exceeds thecapacityfrom this operation, evict the least recently used key.

The functions get and put must each run in O(1) average time complexity.

Intuition

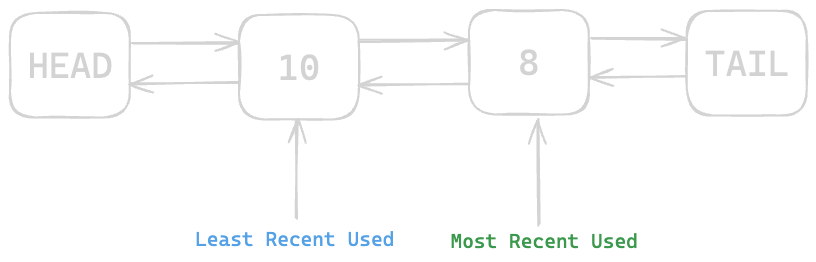

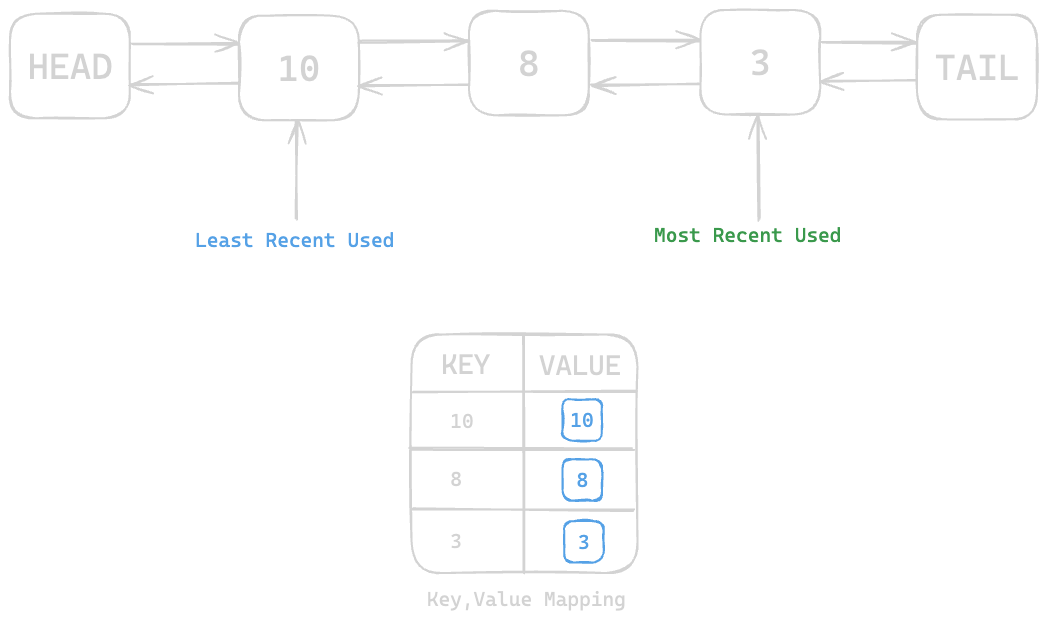

To implement LRU Cache we will use 2 Data Structures such as

- HashMap (To

getitem from the cache inO(1)Time) - Linked List (To

evictLRU item andputnew item inO(1)Time)- identifying

LRUitem inO(1)in a Linked List is simple. It's just the node after theHEADof the Linked List. - Also

putorappendbeforeTAILwill takeO(1)if we use Doubly Linked List

- identifying

Now you could think, why can't we use Array instead of Linked List, because Linked List is dynamic so we don't need to deal with the

indexandsizerather we have to track Least Recent position which is theHEADand Most Recent position which is theTAILnode.

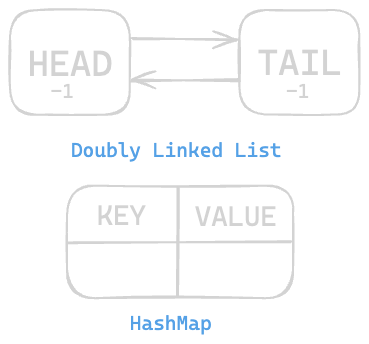

Checkout Design a Doubly Linked List if you have not solved it yet. Now let's prepare the basic structure of the Doubly Linked List.

class Node:

def __init__(self, key: int, val: int):

self.key = key

self.val = val

self.prev = None

self.next = None

class DoublyLinkedList:

def __init__(self):

# The dummy initialization

self.head = Node(key=-1, val=-1)

self.tail = Node(key=-1, val=-1)

# Linking the dummies together

self.head.next = self.tail

self.tail.prev = self.head

class LRUCache:

def __init__(self, capacity: int):

# initiating the data-structures

self.list = DoublyLinkedList()

self.map = {}

self.capacity = capacity

The basic structure is ready it's looking like this

Let's implement the functionalities starting with get function

Get From Cache

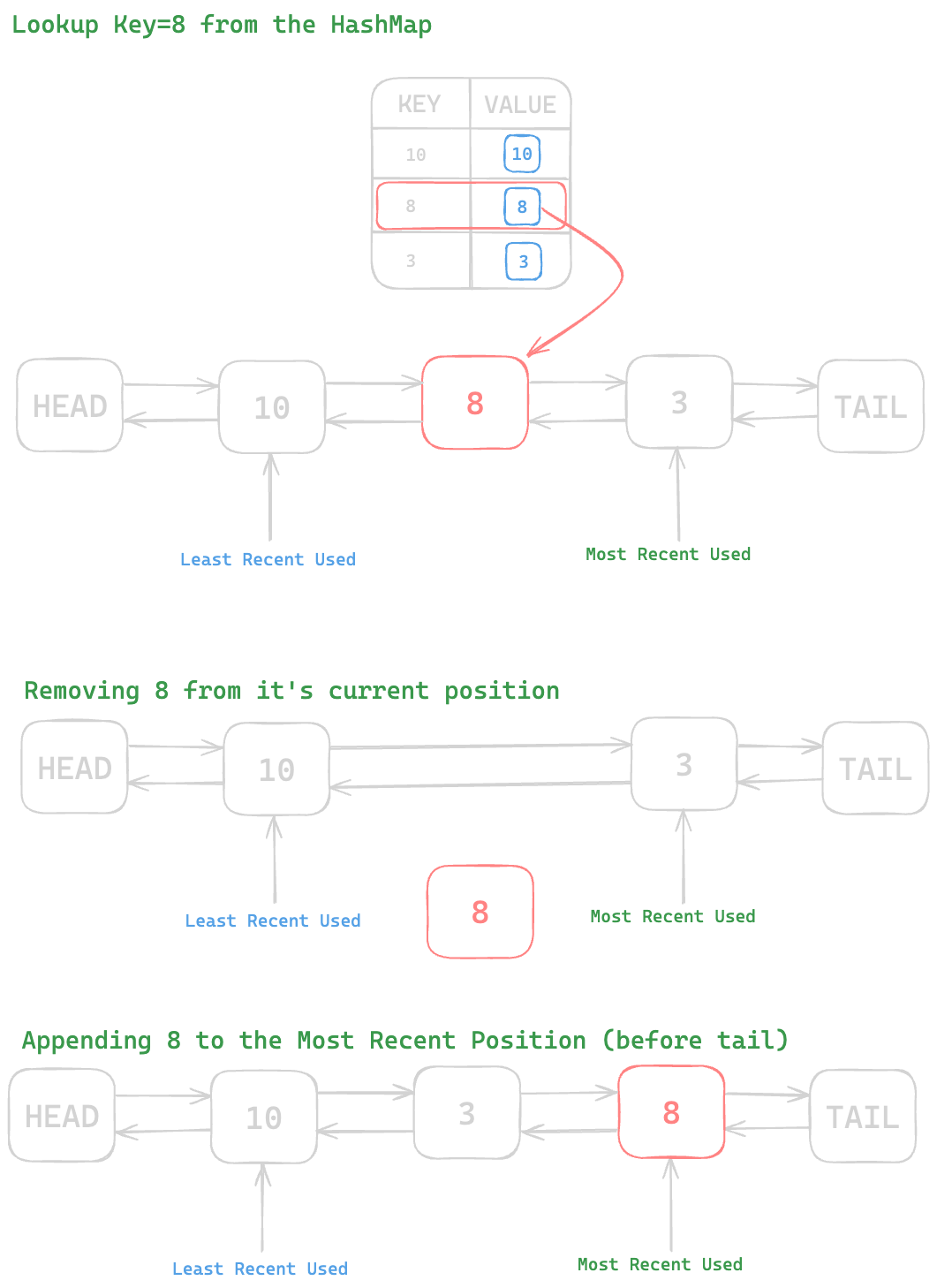

When an item is requested from cache it will be Looked Up by it's key from the HashMap in O(1). The item also be removed from where it is in the Linked List and put back in Most Recent position. Let's illustrate

Suppose we have a Cache with max capacity 3, and we have requested to Get 8 (get(8)). Our algorithm needs to work like this bellow.

- Get the item from HashMap in

O(1)time - Move the requested Node from it's current position to the Most Recent position

Let's code it up

class DoublyLinkedList:

# previous codes are skipped here

def remove(self, node: Node):

prevNode = node.prev

nextNode = node.next

# Link prev and next node to unlink the given node

prevNode.next = nextNode

nextNode.prev = prev

def addAtTail(self, node: Node):

prevNode = self.tail.prev

# connet node with the tail

node.next = self.tail

self.tail.prev = node

# connet node with the prev

node.prev = prevNode

prevNode.next = node

class LRUCache:

# previous codes are skipped here

# Helper function will be used further

def getNode(self, key: int) -> Optional[Node]:

if key not in self.map:

return None

# get node from HashMap

nodeCached = self.map[key]

# moving it to Most Recent position

self.list.remove(nodeCached)

self.list.addAtTail(nodeCached)

return nodeCached

def get(self, key: int) -> int:

nodeCached = self.getNode(key)

return nodeCached.val if nodeCached else -1

Put into Cache

Let's implement the most crucial functionality of a LRU cache put. Here we need to deal with 2 scenarios.

- Item you want to

putis already in the cache. - Item is completely new.

For scenario one, if the givenKEYalready exists in the HashMap we will just update theNODE VALUEwhich is pretty simple. Let's code that part real quick

class LRUCache:

# previous codes are skipped here

def put(self, key:int, value: int):

# if the node exists, the node will be moved to Most Recent position

nodeExisted = self.getNode(key)

if nodeExisted:

# updating the node value

nodeExisted.val = value

else:

# create a new node with given key value and put it into the cache

# you may need to evict the LRU node if the cache capacity exceeds

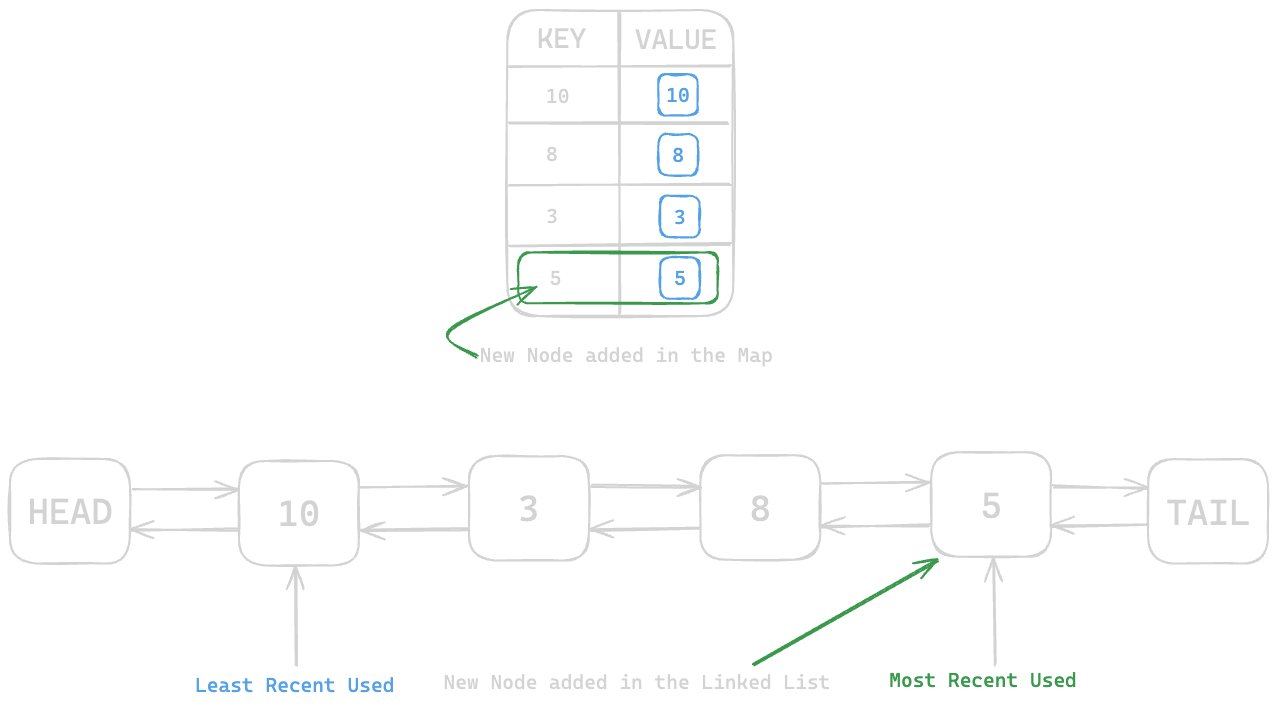

Now for Scenario two, we need to create a new Node and add it to our HashMap for constant lookup. Then the New Node will be appended to at the Tail which is the Most Recent position of the Linked List. Then we need to check the Cache Capacity, the Least Recently Used node will be Evicted if the cache capacity exceeds.

Let's say we are going to add 5 by calling put(key=5, value=5). Let's visualize the process with the given input.

Let's code this part

class LRUCache:

# previous codes are skipped here

def put(self, key:int, value: int):

# previous codes are skipped here

else:

# create a new node with given key value and put it into the cache

newNode = Node(key, value)

self.map[key] = newNode

self.list.addAtTail(newNode)

# you may need to evict the LRU node if the cache capacity exceeds

if len(self.map) > self.capacity:

self.evictLRU()

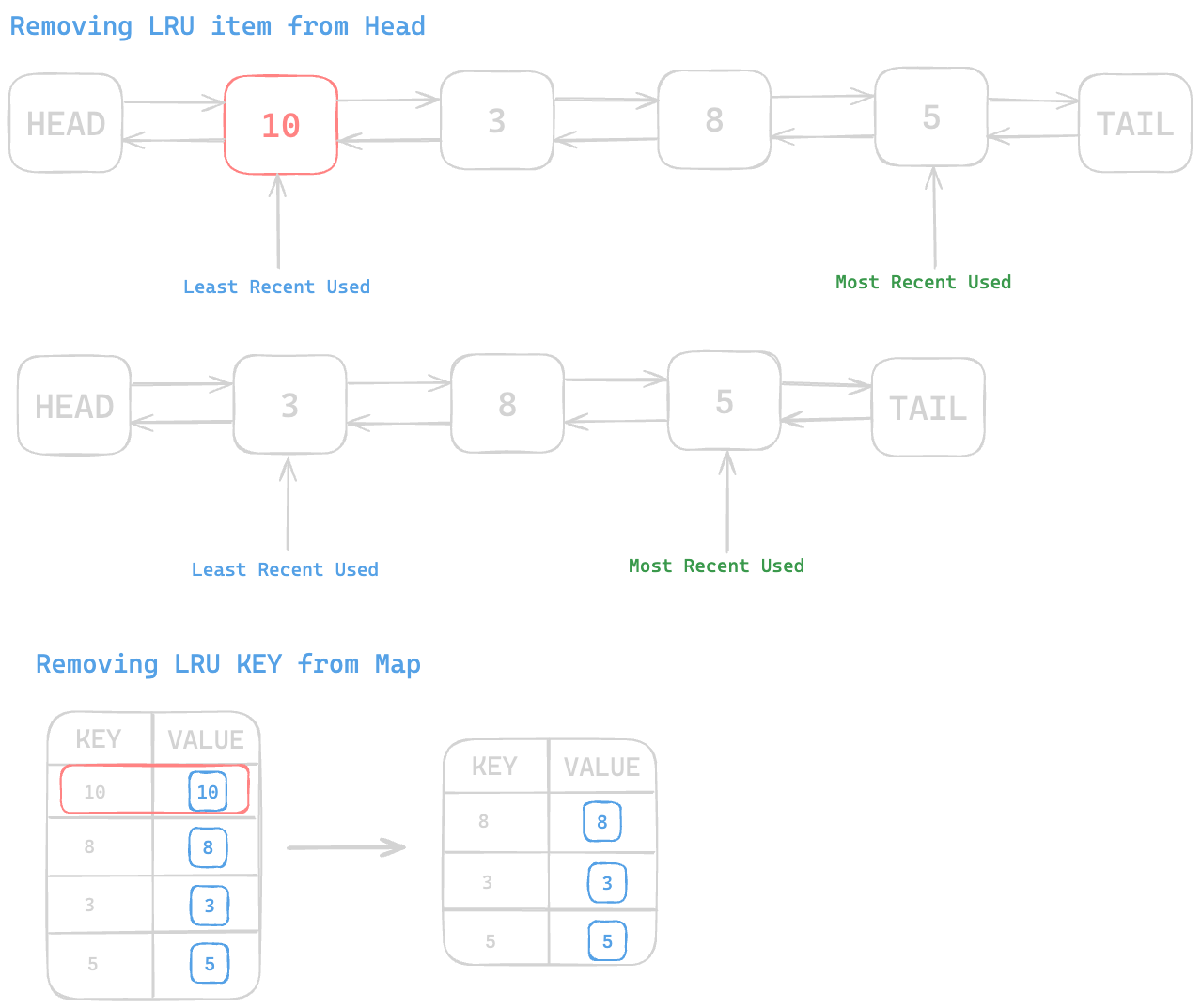

As we can see, after adding the new node the cache capacity got exceeded, so the LRU node (Node after the HEAD position) needs to be evicted from the Linked List as well as from the Map. Let's implement the helper function for eviction.

Let's implement evictLRU function now

class DoublyLinkedList:

def removeFromHead(self) -> Optional[Node]:

nodeAfterHead = self.head.next

# just a safety check

if nodeAfterHead == self.tail:

# nothing to be removed

return None

self.remove(nodeAfterHead)

return nodeAfterHead

class LRUCache:

# Helper Function

def evictLRU(self):

nodeRemoved = self.list.removeFromHead()

if nodeRemoved and nodeRemoved.key in self.map:

# remove key value pair from the map

del self.map[nodeRemoved.key]

That's it. Let's write the whole code altogether.

Complete Code Snippet

class Node:

def __init__(self, key: int, val: int):

self.key = key

self.val = val

self.prev = None

self.next = None

class DoublyLinkedList:

def __init__(self):

# The dummy initialization

self.head = Node(key=-1, val=-1)

self.tail = Node(key=-1, val=-1)

# Linking the dummies together

self.head.next = self.tail

self.tail.prev = self.head

def remove(self, node: Node):

"""

This function removes Node between Two nodes

"""

prevNode = node.prev

nextNode = node.next

# unlink the given node from the Linked List

prevNode.next = nextNode

nextNode.prev = prevNode

def addAtTail(self, node: Node):

"""

This function add the given node before Tail

"""

prevNode = self.tail.prev

# link node with tail

node.next = self.tail

self.tail.prev = node

# link node with previous node

node.prev = prevNode

prevNode.next = node

def removeFromHead(self) -> Optional[Node]:

"""

This function removes Node after Head

"""

nodeAfterHead = self.head.next

# safety check

if nodeAfterHead == self.tail:

return None

# removing node after Head

self.remove(nodeAfterHead)

return nodeAfterHead

class LRUCache:

def __init__(self, capacity: int):

# initiating the data-structures

self.list = DoublyLinkedList()

self.map = {}

self.capacity = capacity

# Helper Function

def getNode(self, key: int) -> Optional[Node]:

if key not in self.map:

return None

nodeCached = self.map[key]

# move the cached node to Most Recent position

self.list.remove(nodeCached)

self.list.addAtTail(nodeCached)

return nodeCached

def get(self, key: int) -> int:

cachedNode = self.getNode(key)

return cachedNode.val if cachedNode else -1

def put(self, key: int, value: int):

nodeExisted = self.getNode(key)

if nodeExisted:

# updating the node value

nodeExisted.val = value

else:

self.writeInCache(key, value)

# Helper Function

def writeInCache(self, key: int, value: int):

newNode = Node(key, value)

self.map[key] = newNode

self.list.addAtTail(newNode)

# check cache capacity

if len(self.map) > self.capacity:

self.evictLRU()

# Helper Function

def evictLRU(self):

nodeRemoved = self.list.removeFromHead()

if nodeRemoved and nodeRemoved.key in self.map:

# removing key value pair from HashMap

del self.map[nodeRemoved.key]

Thanks for Stopping by! Happy Leet coding.

Connect with me on